A caution against customized AI in healthcare

Algorithmic constraints on accessible information can lead not only to echo chambers but also to discrimination, bias, loss of decision autonomy, dependence on AI systems, and diminished human judgement. Further, as AI systems evolve into more independent “agents” capable of handling complex tasks with minimal oversight, the impacts of customization will increasingly manifest in the real world. Rather than confining these concerns to the realms of social media or politics, AI policymakers should consider a broader range of domains where AI customization increasingly shapes human exposure to information. Efforts to make AI inference more inclusive focus on mitigating algorithmic bias or balancing representation in training data sets; but more research and policy should focus on AI model and interface designs that encourage wide-ranging and analytical perspectives, especially for systems that significantly affect human safety and well-being. Below are three recommendations for AI development and policy initiatives to help cultivate “constructive friction” in AI systems and encourage the creation of AI that not only captivates but also fosters critical thinking and broad perspectives.

Leverage decision science to optimize for critical reflection

Generative AI systems now allow users to ask questions in natural language and typically receive a “best fit” result in a seamless, conversational thread. As developers work to ensure responses are accurate, the demand for accuracy among consumers remains complex. While users may value accuracy, their online behavior suggests a stronger preference for information that aligns with their beliefs and preferences. Even this is not straightforward, as consumption habits are influenced by interface design features in ways that are not well understood6. The new proliferation of AI chatbots offers a natural testbed to experiment with these tendencies on a massive scale to identify forms of information delivery that promote critical evaluation of misinformation and consideration of alternative perspectives, without fundamentally compromising sustained user engagement.

A wealth of decision science and behavioral economics literature can help to design such experiments. For example, empirical research shows that the way choices are presented (i.e., “choice architecture”7) can significantly influence decision making. Presenting multiple distinct options aids informed decision-making, but an excess can increase cognitive load8. Further, humans imperfectly evaluate information and make decisions influenced by cognitive and emotional biases and contextual factors (i.e., “bounded rationality”)9. With generative AI systems rapidly replacing traditional search engines for building knowledge, policymakers and Big Tech should partner to promote consumption of diverse content, combat confirmation bias, and foster social unity. Strategies could include integrating alternative perspectives into outputs or using design features like accordion menus, pop-up windows, and progressive disclosure buttons to enhance awareness of contextual information. A vast array of experimental designs from user experience (UX) testing (e.g., A/B testing; conversion rate optimization; multivariate testing) can help to identify decision architectures with positive versus negative impacts on critical thinking.
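As a minimal sketch of how one such A/B comparison might be analyzed, the example below assumes a hypothetical experiment in which two chatbot interface variants are compared on how often users open an "alternative perspectives" panel. The metric, the counts, and the function name `two_proportion_z_test` are illustrative assumptions rather than anything drawn from a real study; the statistic is a standard pooled two-proportion z-test.

```python
import math


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Return (z, two-sided p-value) for the difference between two proportions.

    Uses the pooled-variance z-test with a normal approximation, which is
    only reasonable when both groups are fairly large.
    """
    p_a = success_a / total_a
    p_b = success_b / total_b
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        raise ValueError("No variation in outcomes; the test is undefined.")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: users who opened the panel / users shown the variant.
    z, p = two_proportion_z_test(100, 1000, 150, 1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A significant difference here would only show that one design prompts more clicks on contextual information; whether those clicks reflect deeper critical evaluation would need its own outcome measure.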

Ensure intentionality

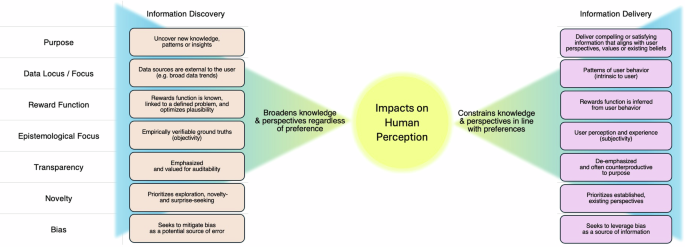

Not all customization is harmful. It is essential to differentiate between using algorithmic customization approaches (e.g., unsupervised learning, latent pattern mining, anomaly detection, dimensionality reduction) to discover verifiable knowledge and using them to adapt the delivery of knowledge to ensure palatability and user acceptance. This distinction (illustrated in Fig. 1) can help evaluate the rationale behind customization and align it with application goals.

Fig. 1: Distinctions between AI systems customized for information discovery and those customized for information delivery across several dimensions, highlighting the impacts of these AI design approaches on human perception.

Consider an AI-powered radiology tool that identifies relevant areas of a medical image and tailors outputs in line with a radiologist’s preferences and past interactions with the system. The design choice to emphasize physician engagement is based on an appreciation that the uptake and utility of an AI tool depends not only on its performance but also on the degree to which users trust it. Emerging research suggests that user trust is partly influenced by whether an AI system considers a user’s preferred variables and ways of receiving or visualizing outputs10,11. Leveraging these insights, novel AI systems for medical imaging analysis (e.g., Aidoc; PathAI) use customization to adapt workflows to mirror how individual radiologists prioritize cases, highlight abnormalities, and generate reports. Similarly, medical “scribes” that automate physician notetaking (e.g., DeepScribe) can be personalized to adapt to physicians’ speech patterns, medical terminology preferences, and summarization styles that match a physician’s preferred level of detail and prioritized information, and perform selective information retrieval (e.g., from electronic health records) based on a physician’s regularly referenced information. Clinician-facing medical chatbots (e.g., OpenEvidence) designed to deliver clinically relevant literature, evidence-based guidelines, and decision support summaries at the point of care are using customization to align outputs with patterns in a clinician’s queries and preferred sources. Other emerging AI tools in healthcare (e.g., for algorithmic risk prediction; patient scheduling; patient monitoring early alert systems) likewise feature customized information delivery.

While tailoring outputs may lead to greater uptake of tools that ultimately enhance diagnostic efficiency and precision, it may cause physicians to miss critical information if preferences do not reflect variables with the highest predictive value for treating disease, or if preferred outputs constrain a physician’s ability to consider the wide range of information necessary to make informed decisions. Further, outputs from AI-based medical scribes may embody a physician’s unique expertise but also their biases. These biases may then be documented and perpetuated in a patient’s medical record in ways that shape (and potentially constrain) the perspectives and understandings of future physicians.

We should be designing AI tools for healthcare and other high-stakes fields that work to offset, not perpetuate, human biases. Given the variability in how physicians interpret and respond to clinical information, hospital governance, as well as developers and policymakers, should establish limits on AI tools’ accommodation of user preferences, balancing trust and engagement with other objectives like accuracy or comprehensiveness. Further, hospitals should require regular audits of AI performance impacts on clinical decision making and health outcomes to ensure critical variables with high predictive value are not overlooked in favor of user preferences. These checks and balances are likewise critical in other sectors where fairness, standardization, or impartiality are principal considerations (e.g., national security; legal rulings; standardized testing). In these contexts, developers should prioritize design elements that enable users to access “ground truths”, that is, accurate, verifiable information that reflects reality (e.g., the severity of a patient’s disease, the full range of security threats). Depending on its aim, customization can either lead us closer to or further from these ground truths, wielding a power to constrain human perception as much as it can expand it.

Customization thus requires caution and intentionality, supported by rationales that align with responsible uses of AI across different domains and settings. While all forms of information delivery, even books, guide our attention, the distinguishing aspect of AI that demands closer scrutiny is the degree to which reliance on AI over other sources of knowledge will influence human perspectives over longer timescales. The widespread integration of generative AI marks the first time that humans are relinquishing responsibility and control over generating and curating knowledge to a non-human entity, with uncertain impacts on human progress. Intentionally programming these systems to restrict the information we ingest must be done with utmost care. While we have ample evidence of the harmful impacts of content filtering in social media, we do not yet know the harms of taking this approach with generative AI. Now is the time to anticipate such consequences. Developers could be incentivized to disclose the potential consequences of customization. Modeled after “social impact statements” that prompt developers to consider the effects of AI systems on different groups or individuals, “design impact statements” could encourage developers to transparently evaluate how curating information for engagement, acceptability or other reasons might inadvertently exclude important information or perpetuate existing biases. Developers could also highlight supplementary design choices to ensure that users are made aware of domain-critical information, even if it does not align with user preferences or past interactions. Clear documentation of personalization parameters across use cases can also help to build an evidence base of impacts.

Prioritize unconventional thinking

Generative AI stands out from previous iterations of AI for its significant potential to expand the boundaries of human cognition and transcend conventional modes of thought. Despite being trained on existing knowledge, GenAI can produce entirely novel content. It does this largely through deep learning architectures such as generative adversarial networks (GANs) and transformers, which enable AI to create unique material that is not just a copy or remix of existing data. Various forms of reinforcement learning can encourage GenAI to “think outside of the box.” Building “intrinsic rewards” into a system, like rewarding novelty- and surprise-seeking, can encourage a model to explore new possibilities and deviate from existing expectations. Similarly, reward shaping can help to gradually shift an algorithm’s focus towards more complex or unexpected outcomes. Incorporating other motivation-like mechanisms indirectly through novel loss functions, regularization methods, or (e.g., curiosity-driven) training strategies can encourage diversity and exploration. These approaches are what afford these systems their unprecedented potential to expand the frontiers of human knowledge and creativity.
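To make the idea of an intrinsic reward concrete, the sketch below shows one simple form from the reinforcement learning literature: a count-based novelty bonus that shrinks as a state is revisited. It is a generic illustration, not the training procedure of any particular generative model; the class name `NoveltyBonus`, the `scale` parameter, and the use of hashable "states" are assumptions made for the example.

```python
import math
from collections import Counter


class NoveltyBonus:
    """Adds a bonus of scale / sqrt(visit count) to the task reward.

    Rarely seen states earn a larger bonus, nudging a learner toward
    unexplored outcomes. States must be hashable (e.g., strings or tuples).
    """

    def __init__(self, scale=1.0):
        self.scale = scale
        self.counts = Counter()

    def reward(self, state, extrinsic_reward):
        # Count this visit first so the first visit yields exactly `scale`.
        self.counts[state] += 1
        bonus = self.scale / math.sqrt(self.counts[state])
        return extrinsic_reward + bonus


if __name__ == "__main__":
    bonus = NoveltyBonus(scale=1.0)
    for state in ["familiar", "familiar", "familiar", "new"]:
        print(state, round(bonus.reward(state, extrinsic_reward=0.0), 3))
```

The bonus decays with each repeat visit, so a learner that keeps returning to familiar outputs gains progressively less than one that tries something it has not produced before.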

However, the drive to outwardly expand human knowledge lies in direct contradiction to the impulse that underlies personalization, which is to narrowly channel information in line with existing inclinations. AI policies should actively promote the capacity of AI systems to help humans critically reflect, evaluate and act on information in new ways, not according to the old ways. Design choices should encourage us to engage in unconventional or divergent thinking, consider alternative perspectives, and pursue explainability to better understand causality. For instance, new research12 suggests that introducing certain forms of “prompt engineering” (crafting user query inputs to guide model outputs) can both enhance AI effectiveness and bolster users’ capacity to assess and gauge system performance. These include prompt strategies that steer AI systems to decompose complex queries into stepwise instructions (instruction generation) and to deliver outputs with stepwise explanations (chain of thought generation). Offering these intermediate reasoning steps on both sides (input/output) of a user’s query can enhance transparency and explainability and enable critical evaluation of outputs.
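The two prompt strategies described above can be sketched as a simple template that asks a model first to decompose a query and then to explain each step. The wording of the template and the function name `build_stepwise_prompt` are hypothetical choices for illustration; the sketch does not call any model and is not taken from the cited research.

```python
def build_stepwise_prompt(question):
    """Wrap a user question in instructions that request intermediate steps.

    The first instruction asks for decomposition (instruction generation);
    the second asks for stepwise explanations (chain of thought generation).
    """
    return (
        "First, break the question below into numbered sub-steps.\n"
        "Then answer each sub-step in order, explaining your reasoning.\n"
        "Finally, state the overall answer on a line starting with 'Answer:'.\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    print(build_stepwise_prompt("Which imaging findings should be reviewed first?"))
```

Because the intermediate steps appear in the output, a user can check each one rather than accepting or rejecting a single final answer.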

Such an approach aligns with recent policy suggestions that AI systems provide metadata about system inputs and outputs that permit users to rigorously assess a system’s performance accuracy, reliability, and fairness. Scholars have proposed solutions like putting “nutrition facts labels” on AI outputs13, or implementing design approaches that decelerate heuristic thinking or mitigate cognitive and emotional biases that inhibit dispassionate evaluation of facts (e.g., racial bias) or foster over-reliance on system results (e.g., automation bias)14. Interactive design features mentioned earlier, including expandable elements, dynamic filtering or resorting, or translation of information to other multimodal formats (e.g., text-to-image; image-to-sound), may serve mutual goals of exposing alternative perspectives while igniting human creativity and imagination.
