Responsible Artificial Intelligence governance in oncology

This Results section shares the reusable frameworks, processes, and tools that resulted from the design and development phase of our study. Using these tools in the implementation phase, we registered, evaluated, and monitored 26 AI models (including large language models) and 2 Ambient AI pilots. We also conducted a retrospective review of 33 live nomograms using these approaches. Nomograms are clinical decision aids that generate numeric or graphical predictions of clinical events from an underlying statistical model with several prognostic variables. The descriptive statistics and use cases in this section show how these frameworks, processes, and tools come together to support our RAI governance approach in action.

Phase 1 – Design & development: AI Task Force Results

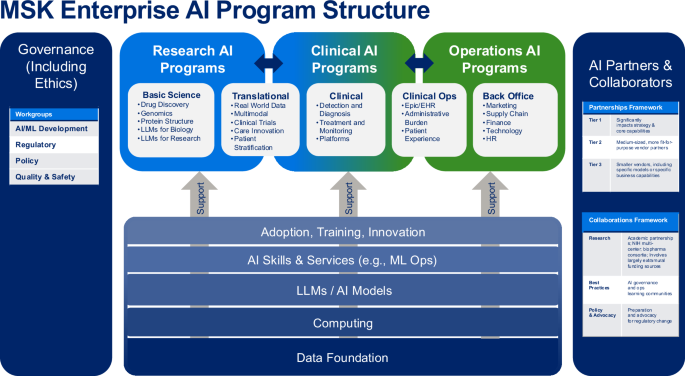

The work of the AI Task Force (AITF) resulted in our overall AI program framework (see Fig. 1) and the identification of 4 main challenges to be overcome: 1) high-quality data for AI model development, 2) high-performance computing power, 3) AI talent capacity, and 4) policies and procedures. The initial AI Model Inventory completed by the AITF in Q4 2023 identified 87 active projects (66 research (76%); 15 clinical (17%); 6 operations (7%)) spanning 9 program domains. The leading development teams for clinical and operational AI models at MSK are Strategy & Innovation (9 models), Medical Physics (3 models), and Computational Pathology (3 models). The AITF subgroups identified 22 priorities for future AI-related investment, which were consolidated into 5 high-level strategic goals. The AITF developed a partnership model (see Fig. 1, right column) for use in evaluating potential AI vendors. The AITF also recommended expanding our high-quality curated data by using AI-enabled curation to increase the pace of curation.

Fig. 1: Overall AI program structure developed by Memorial Sloan Kettering’s AI Task Force with three programmatic AI domains (Research, Clinical, Operations), supported by governance, partnerships, and underlying infrastructure, skills and processes. MSK Memorial Sloan Kettering, AI/ML artificial intelligence/machine learning, LLM large language model, HR human resources, EHR electronic health record, ML Ops machine learning operations. Source: Memorial Sloan Kettering.

Phase 1 – Design & development: AI Governance Committee Results

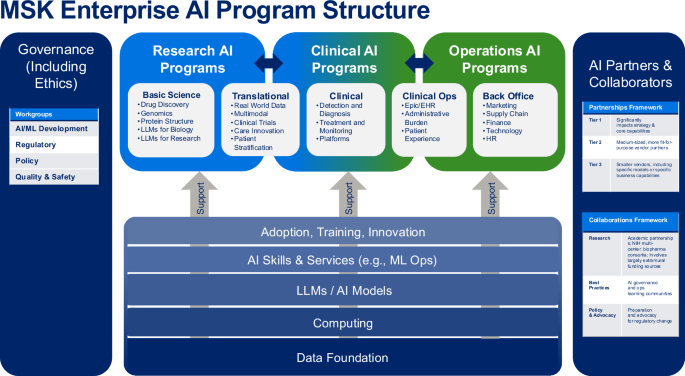

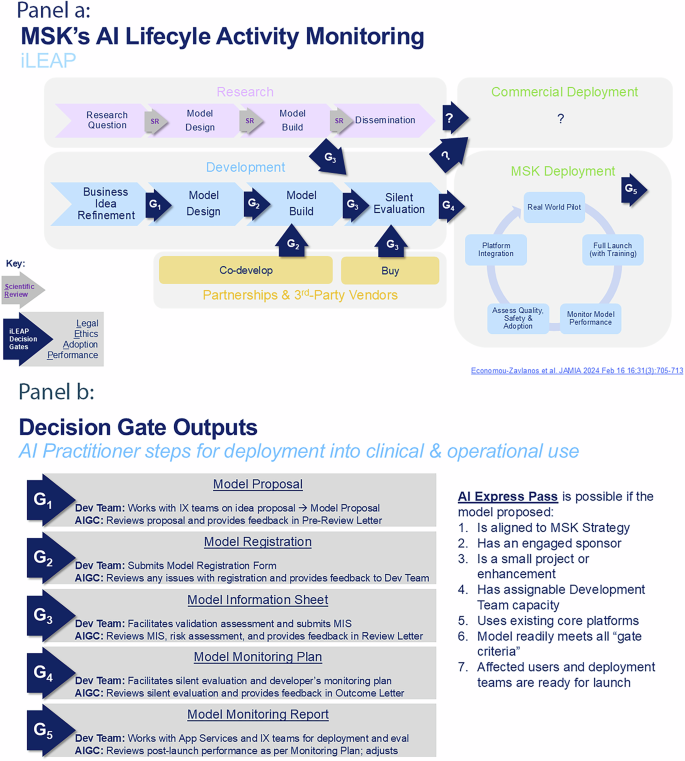

The work of the AI Governance Committee (AIGC) resulted in our novel oncology AI Lifecycle Management operating model, iLEAP, and its decision gates (“Gx”; see Fig. 2, Panel a). iLEAP stands for Legal, Ethics, Adoption, Performance. It has three main paths for AI practitioners: 1) research (purple path), 2) home-grown build (blue path), and 3) acquired or purchased (orange path). Models developed for research projects are out of scope for the AIGC and are governed by existing scientific review processes via MSK’s IRB to ensure scientific freedom and velocity. The AIGC acts as a consultant to the IRB in this path. However, if AI practitioners bring research models forward for translation into clinical or operational use, the AIGC review process is initiated, beginning at G3 at a minimum. Decision gate definitions and Express Path criteria are described in Fig. 2, Panel b. The “embedded” AIGC is positioned within the enterprise digital governance structure as shown in Fig. 3. The AIGC developed a Model Information Sheet (MIS, also known as a “nutrition card”), which is now used to prospectively register in our Model Registry all models that are headed for silent-evaluation mode, pilot, or full production deployment (see Table 1 for MIS details). Anticipatable “aiAE’s” are captured at G2 and formalized in the MIS at G3 pre-launch. The Model Registry currently contains 21 MISs. The AIGC AI/ML workgroup adapted and launched a risk assessment model for reviewing acquired and in-house-built AI models (see Table 2)32. Model risk scores balance the averaged risk factors in the upper part of Table 2 against the averaged mitigation measures in the lower part of Table 2. Individual risk factor scores are 1-Low, 2-Medium, or 3-High. Mitigation measures are binary: 1-present or 0-absent. We also began implementing TrAAIT, a validated tool that we developed previously for measuring clinician trust in AI, as part of the G5 evaluation toolkit33. This trust assessment is used when relevant as part of the “Assess Quality, Safety & Adoption” step within iLEAP G5, as shown in Fig. 2, Panel a.
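
The scoring arithmetic described for the risk assessment can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the tool in Table 2: the factor and mitigation names are placeholders, and because the text says only that mitigation measures "offset" risk factors, the simple subtraction in `net_score` is an assumption. No low/medium/high cut-offs are shown because the text does not give them.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RiskAssessment:
    # Hypothetical factor names mapped to scores: 1 (low), 2 (medium), 3 (high).
    risk_factors: dict[str, int]
    # Hypothetical mitigation names mapped to present (True) or absent (False).
    mitigations: dict[str, bool]

    def averaged_risk(self) -> float:
        """Average of the individual risk factor scores."""
        for name, score in self.risk_factors.items():
            if score not in (1, 2, 3):
                raise ValueError(f"{name}: risk score must be 1, 2, or 3")
        return float(mean(self.risk_factors.values()))

    def averaged_mitigation(self) -> float:
        """Average of the binary mitigation flags (1 = present, 0 = absent)."""
        return float(mean(1 if present else 0 for present in self.mitigations.values()))

    def net_score(self) -> float:
        # ASSUMPTION: the "offset" is modeled as simple subtraction.
        return self.averaged_risk() - self.averaged_mitigation()


# Illustrative values only; these are not the entries of Table 2.
example = RiskAssessment(
    risk_factors={"factor_a": 1, "factor_b": 1, "factor_c": 2, "factor_d": 1},
    mitigations={"m1": True, "m2": True, "m3": True, "m4": True, "m5": False},
)
print(example.averaged_risk(), example.averaged_mitigation(), example.net_score())
```

Keeping the two averages separate, as well as the net value, mirrors how the case studies report an averaged risk factor score and an averaged mitigation measure score side by side.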

Fig. 2: (a) End-to-end AI model lifecycle management model employed by the AI Governance Committee with three paths to entry into enterprise-wide deployment (research: purple path; in-house development: blue path; 3rd-party co-development or purchase: orange path). (b) Decision gate definitions, the outputs of each decision gate, and express path criteria used by the AI Governance Committee for review of each registered model. iLEAP Legal, Ethics, Adoption, Performance, MSK Memorial Sloan Kettering, IX Informatics, Dev Development, MIS Model Information Sheet, App Application, AIGC AI Governance Committee. Source: Memorial Sloan Kettering.

Fig. 3: Positioning of the AI Governance Committee as embedded within overall digital governance structures, along with goals, primary activities, and key collaborations with other related committees needed for successful governance of AI models throughout their lifecycle. IRB Institutional Review Board, DAC Digital Advisory Committee, RAI Responsible Artificial Intelligence, MSK Memorial Sloan Kettering, IT Information Technology. Source: Memorial Sloan Kettering.

Phase 2 – Implementation: AI Model Portfolio Management Results

The AIGC treats the overall collection of AI models at various stage gates of development as an actively managed “portfolio”. The Model Registry enables tracking of which models are at each iLEAP stage gate as they mature. Part of our iterative refinement process over the last year has been to clarify for AI practitioners the specific entry and exit criteria for each gate, so that they are easily explained and achievable. For example, a key transition point is G3 (Model Information Sheet) into G4 (Model Monitoring Plan), the point at which models get “productionized” after a period of validation in a testing environment (see Fig. 2, Panel a). For AI models exiting G3, sponsors and leads, working in collaboration with the AIGC and the AI/ML solution team, must have a) completed model registration in the registry, b) completed the online questionnaire for the Model Information Sheet (including FDA Software as a Medical Device (SaMD) screening questions), c) completed a risk assessment with our risk management tool, and d) received an AIGC Review Letter approving the move of the model from the testing environment into enterprise production systems. Meeting all the criteria for an “Express Pass” enables a more rapid review by the AIGC and faster deployment into production (see Fig. 2, Panel b), especially if the AI practitioner proposes to deploy the model through existing, approved core platforms. The Express Pass process flow is depicted for Case Study #1 below.
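
The four G3 exit criteria listed above lend themselves to a simple checklist. The sketch below is one hypothetical way to represent them; the class, field, and function names are illustrative and do not describe the actual Model Registry.

```python
from dataclasses import dataclass


@dataclass
class G3ExitStatus:
    # Field names are illustrative; they mirror criteria a) to d) in the text.
    registered_in_model_registry: bool
    mis_questionnaire_completed: bool  # includes the SaMD screening questions
    risk_assessment_completed: bool
    aigc_review_letter_received: bool


def missing_g3_criteria(status: G3ExitStatus) -> list[str]:
    """Return the names of exit criteria not yet met (empty means ready for G4)."""
    return [name for name, met in vars(status).items() if not met]


# A model that has done everything except receive its review letter.
pending = G3ExitStatus(True, True, True, False)
print(missing_g3_criteria(pending))
```

Returning the list of unmet criteria, instead of a single pass/fail flag, gives sponsors and leads an explicit statement of what remains before the model can move into production.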

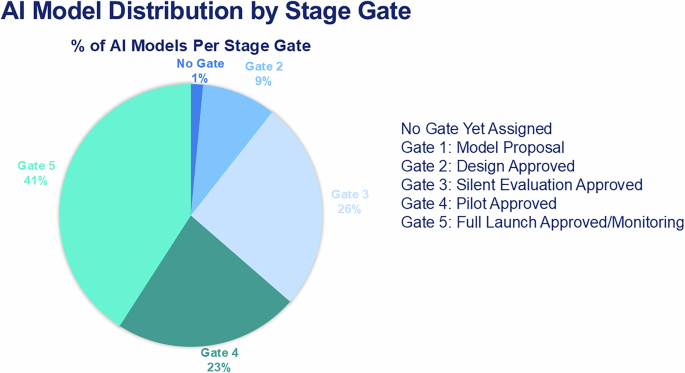

Our Model Registry allows us to track the distribution of models across the iLEAP stage gates (see Fig. 4). The AI/ML Solution Engineering team has supported the development of 19 models year-to-date (see Table 3) and conducted 17 acquired model risk assessments. The AIGC has provided 2 formal “Express Path” Review Letters to sponsors (one each for our Radiology and Medical Physics departments). We reviewed the G5 success metrics of 2 Ambient AI pilots and approved one of these to move to an expanded deployment. We retrospectively reviewed 33 live clinical nomograms with sponsors as part of G5 monitoring, which resulted in sunsetting 2 models because the clinical evidence had evolved. The Quality & Safety workgroup is planning for the interception and remediation of “aiAE’s” for models in the G4 and G5 stages, which will guide the “Assess Quality, Safety & Adoption” step in G5. This work will leverage existing voluntary reporting and departmental quality assurance infrastructure to avoid duplicative processes. Trend analysis shows that overall AI demand is increasing. AI project intake volume was up 63% in calendar year 2024 vs. 2023. Five of the proposals reviewed for deployment in 2024 were 3rd-party vendor models, compared to 15 proposed and reviewed for deployment in 2025.

Fig. 4: Relative distribution of current AI models through the lifecycle management stage gates, which is dynamic from month to month as models mature through the process. AI artificial intelligence. Source: Memorial Sloan Kettering.

Monitoring the performance and impact of models that go live in production is mission critical in a mature RAI governance framework. The AIGC adopted four main components of evaluation in G5 (Monitoring Report), which are prospectively planned in G4 (Monitoring Plan) with the sponsors and leads for each model. The main components of G5 monitoring are: (1) model performance in “production” as compared to in “the lab” (e.g., precision, recall, and F-measure for monitoring drift), (2) adoption (using the TrAAIT tool), (3) pre-specified success metric attainment, and (4) rate of aiAE’s for safety monitoring. Each model is assigned a date for formal AIGC review, usually 6–12 months after go-live or major revision. Evaluation criteria in G5 are tailored to a model’s risk profile and what it accomplishes in workflow. One example in practice is inpatient clinical deterioration in oncology. Our home-grown Prognosis at Admission model predicts 45-day mortality10. Its success metrics focus on goal-concordant care, such as Goal of Care documentation compliance, appropriate referral to hospice, and rate of “ICU days” near the end of life. Of note, this model is planned for re-launch within our new EHR; later this year it will be compared to an EHR-supplied deterioration model on these metrics, and the comparison will be reviewed by the AIGC. A second example is our Ambient AI deployment. We will assess documentation quality, “pajama time”, adoption rate, and cognitive burden, and the AIGC will use that evaluation to determine whether the use of Ambient AI should be further expanded.
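
The first monitoring component, comparing production performance with lab performance, can be illustrated with a short sketch. It computes precision, recall, and the F1 form of the F-measure from confusion counts and flags any metric that has dropped by more than a tolerance. The 0.05 tolerance, the counts, and all names below are assumptions for illustration; the text does not specify how drift thresholds are set.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    true_positives: int
    false_positives: int
    false_negatives: int


def precision_recall_f1(c: ConfusionCounts) -> tuple[float, float, float]:
    """Compute precision, recall, and F1; a metric with a zero denominator is 0.0."""
    predicted_pos = c.true_positives + c.false_positives
    actual_pos = c.true_positives + c.false_negatives
    precision = c.true_positives / predicted_pos if predicted_pos else 0.0
    recall = c.true_positives / actual_pos if actual_pos else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def drift_flags(lab: ConfusionCounts, production: ConfusionCounts,
                tolerance: float = 0.05) -> dict[str, bool]:
    """Flag each metric whose production value fell more than `tolerance` below lab."""
    names = ("precision", "recall", "f1")
    lab_metrics = precision_recall_f1(lab)
    prod_metrics = precision_recall_f1(production)
    # ASSUMPTION: drift is an absolute drop; only degradation is flagged.
    return {n: (l - p) > tolerance for n, l, p in zip(names, lab_metrics, prod_metrics)}


# Hypothetical counts: the production model produces more false positives.
lab_counts = ConfusionCounts(true_positives=90, false_positives=10, false_negatives=10)
prod_counts = ConfusionCounts(true_positives=70, false_positives=30, false_negatives=10)
print(drift_flags(lab_counts, prod_counts))
```

A per-metric flag, instead of a single combined score, makes it easier to see whether degradation comes from more false alarms (precision) or more missed cases (recall).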

Phase 2 – Implementation: Case studies of RAI governance in action

To demonstrate our governance approach in real-world use, we describe below a recent AIGC agenda (October 2024) and two recent AI model review case studies. Typical AIGC agendas include programmatic updates, 1–2 key topics, new business generated from our intake process, and follow-up and monitoring of models previously reviewed and deployed into production. The two case studies were selected to illustrate how our Express Pass works and to highlight some of the issues of “build vs. buy”, using one example of each. Of note, only these 2 of 19 live models (10.5% of models approved for G5 deployment) had met Express Path criteria as of the end of this study period.

The October 2024 AIGC agenda included new business, a key topic, model follow-ups, and a review of the results of two Ambient AI pilots and the associated decisions. The AIGC made two decisions on the Ambient AI pilots: (1) obtaining informed 2-party consent for these pilots from patients and care teams was approved as consistent with our guiding AI ethics principles (even though New York State law requires only 1-party consent for recordings), and (2) an expanded Ambient AI pilot was recommended based on the initial G5 Monitoring Report. The new business review included 1 model at G1, 20 models at G2, and 3 models at G3 (5 developed at MSK; 19 vendor-developed). Our key topic involved finalizing updates to the language in our charter around decision rights to pause or terminate models with degraded performance or safety issues. Lastly, we initiated proactive updates, via the Policy workgroup, to existing policies and procedures on allowed and prohibited uses of AI.

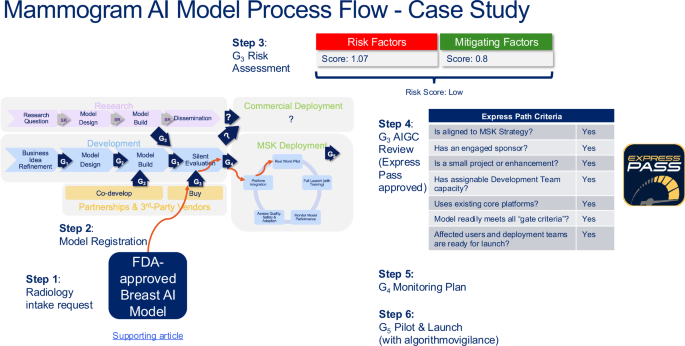

The first case study describes our AI governance process flow for an FDA-approved radiology AI model from a 3rd-party vendor. This model uses image-based analysis to identify possible breast cancers. The second case study describes an AI model built internally by our Medical Physics department: a tumor segmentation model for brain metastasis built on MRI images.

Our first case study was a “buy/acquire” AI model example from a 3rd-party vendor. Our Radiology Department identified an FDA-approved AI model from a new 3rd-party vendor that assists radiologists in triaging mammograms, using image-based analysis to identify possible breast cancers. Radiology asked for an “Express Pass” review (see Fig. 2, Panel b). The model operates on top of our enterprise Picture Archiving and Communication System (PACS) as an aid to the radiologist doing the interpretation. Intake for the idea proposal was reviewed and refined by the AIGC with the Radiology team, Informatics, and Application Services. Radiology at MSK has experience with developing and implementing AI. The Chair of the department is the Sponsor, and the Chief of the Breast Service is the Lead. The overall risk assessment score using our tool in Table 2 was “low”, driven by an averaged risk factor score of 1.07 and mitigated by the presence of human-in-the-loop review by the interpreting radiologist, post-go-live monitoring reports provided by the model vendor, and FDA approval for the intended use (averaged mitigation measure score 0.8). The Radiology Department has an established quality assurance program, which they will leverage in their post-go-live monitoring plan and an internal education plan. The AIGC recommended proceeding to bring this model live, with ongoing monitoring (next G5 check point 6–12 months post-live). The turn-around time for the “Express Pass” review by the AIGC was 2 weeks. MSK’s enterprise Application Services deployed the model for Radiology. This model’s progression through the iLEAP model, including the Risk Assessment and Express Pass approach, is summarized visually in Fig. 5.

Fig. 5: Real-world example of an acquired FDA-approved radiology AI model from a 3rd-party vendor, and the steps on the path it followed through the lifecycle management stage gates. iLEAP Legal, Ethics, Adoption, Performance, FDA Food and Drug Administration, AIGC AI Governance Committee. Source: Memorial Sloan Kettering.

Our second case study was a “build” AI model example, developed in-house. Our Medical Physics department has a long history of developing and implementing in-house methods for clinical AI for radiation oncology normal tissue contouring, tumor segmentation, and treatment planning, with over 10,000 patient treatment plans facilitated by their in-house developed AI tools13,34. Medical Physics asked the AIGC for an “Express Path” review of an in-house model they developed for brain metastasis tumor segmentation built on MRI images. Intake for the idea proposal was reviewed and refined by the AIGC with the Medical Physics team, Informatics, and the AI/ML Platform Group. The Chair of the department is the Sponsor, and the Vice Chair is the Lead. The risk assessment score was “medium”, driven by use with live patients but mitigated by Medical Physics’ established quality assurance program for software deployment, which is human-in-the-loop35. The AIGC recommended proceeding to bring this model live, with ongoing monitoring (next G5 check point 6–12 months post-live). The turn-around time for the “Express Pass” review by the AIGC was 2 weeks. MSK’s Medical Physics department will deploy the model.
